2D-to-3D AR cartoons

This is an implementation of sketch-based modelling that generates 3D models from 2D children’s drawings. The generated models are also made available in augmented reality for interaction with a robot. The project is inspired by the works of [1], [2] and [3]. It was developed during my master’s studies and is implemented in Java on Android with ARCore, libGDX and OpenCV.

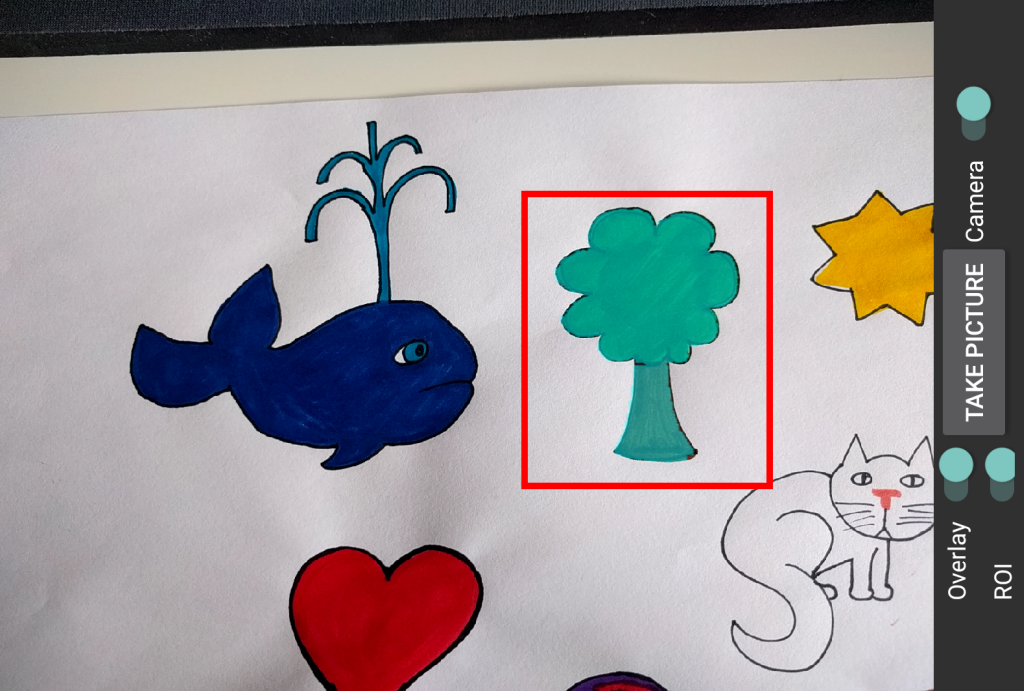

Acquisition

To acquire a 2D drawing, a photo is taken through the camera activity. A preview of the detected segments can be enabled, and a region of interest can be selected.

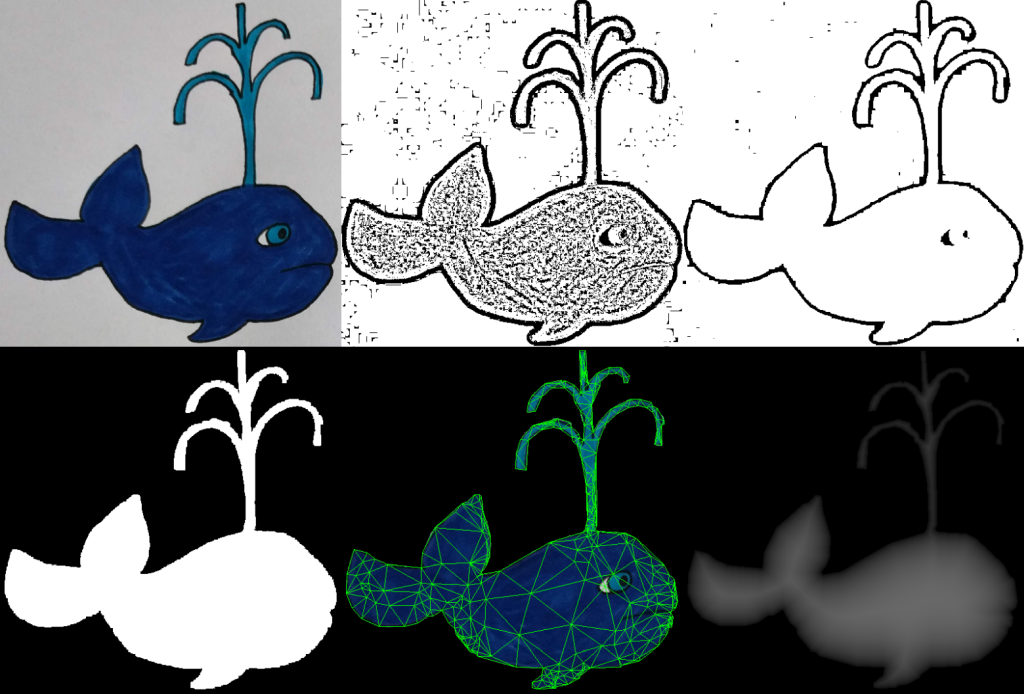

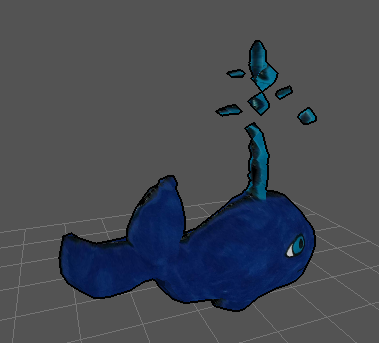

Segmentation and model generation

Next, the captured image goes through the processing pipeline to generate a 3D model. First, adaptive thresholding is applied to the saturation channel of the input image. Morphological transformations then clean up the resulting outline map. Enclosed regions are extracted into the region map for further processing. The region map is triangulated, and the result is refined by adding interior points to the mesh. Finally, a distance map is generated from the region map and used to inflate the mesh along its z-axis.
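The last step, inflating the mesh from a distance map, can be illustrated with a minimal sketch in plain Java. It is not the project's actual code. The class `InflateSketch`, both method names, the city-block (L1) distance metric and the circular height profile are all assumptions chosen for illustration. The project may well use OpenCV's distance transform and a different height function.

```java
/** Hypothetical helper: illustrates distance-map based inflation, not the project's code. */
final class InflateSketch {

    /**
     * Two-pass city-block (L1) distance from every inside pixel to the nearest
     * outside pixel. Assumption: the mask contains at least one outside pixel;
     * otherwise every entry stays at the sentinel value h + w.
     */
    static int[][] distanceMap(boolean[][] inside) {
        int h = inside.length, w = inside[0].length;
        int inf = h + w; // larger than any real L1 distance inside the grid
        int[][] d = new int[h][w];
        // Forward pass: propagate distances from the top and left neighbours.
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                if (!inside[y][x]) { d[y][x] = 0; continue; }
                int best = inf;
                if (y > 0) best = Math.min(best, d[y - 1][x] + 1);
                if (x > 0) best = Math.min(best, d[y][x - 1] + 1);
                d[y][x] = best;
            }
        }
        // Backward pass: propagate distances from the bottom and right neighbours.
        for (int y = h - 1; y >= 0; y--) {
            for (int x = w - 1; x >= 0; x--) {
                if (y < h - 1) d[y][x] = Math.min(d[y][x], d[y + 1][x] + 1);
                if (x < w - 1) d[y][x] = Math.min(d[y][x], d[y][x + 1] + 1);
            }
        }
        return d;
    }

    /**
     * Returns one z value per mesh vertex. Each vertex is an {x, y} pair in
     * pixel coordinates. The height follows a circular profile (an assumption):
     * z = sqrt(dMax^2 - (dMax - d)^2), so z is 0 on the outline and dMax at
     * the deepest interior point.
     */
    static float[] inflate(float[][] verticesXY, int[][] dist) {
        int h = dist.length, w = dist[0].length;
        int dMax = 0;
        for (int[] row : dist) for (int v : row) dMax = Math.max(dMax, v);
        float[] z = new float[verticesXY.length];
        for (int i = 0; i < verticesXY.length; i++) {
            // Sample the distance map at the nearest pixel, clamped to the image.
            int x = Math.min(w - 1, Math.max(0, Math.round(verticesXY[i][0])));
            int y = Math.min(h - 1, Math.max(0, Math.round(verticesXY[i][1])));
            double r = dMax - dist[y][x];
            z[i] = (float) Math.sqrt((double) dMax * dMax - r * r);
        }
        return z;
    }
}
```

A closed shape is typically obtained by mirroring the resulting z values to the back side of the mesh. Whether the project does this is not stated here.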

For debugging, the standalone desktop application (screenshot) allows for easy testing and tweaking of the 2D-to-3D process.

Robot interaction

For the robot interaction, a fiducial marker is attached to a LEGO EV3 robot running LeJOS. The marker’s screen position is tracked, and rays are cast based on two positions on the marker. The intersections of these rays with the AR plane allow the robot’s position to be translated into AR world space. Finally, this position is used to calculate distances, which are sent to the robot as “virtual” distance sensor readings.
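The geometry behind this step can be sketched in plain Java as a ray-plane intersection followed by a distance computation. This is an illustrative sketch only. The class `RobotRaySketch` and its methods are hypothetical. Taking the midpoint of the two hit points as the robot position is also an assumption, since the text does not say how the two intersections are combined. Vectors are plain `double[3]` arrays so the example does not depend on ARCore or libGDX types.

```java
/** Hypothetical helper: illustrates the geometry only, not the project's code. */
final class RobotRaySketch {

    static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    /**
     * Intersects the ray origin + t * dir (t >= 0) with the plane through
     * planePoint with normal planeNormal. Returns null if the ray is parallel
     * to the plane or the plane lies behind the ray origin.
     */
    static double[] intersectRayPlane(double[] origin, double[] dir,
                                      double[] planePoint, double[] planeNormal) {
        double denom = dot(planeNormal, dir);
        if (Math.abs(denom) < 1e-9) return null; // ray parallel to plane
        double[] toPlane = {
            planePoint[0] - origin[0],
            planePoint[1] - origin[1],
            planePoint[2] - origin[2]
        };
        double t = dot(planeNormal, toPlane) / denom;
        if (t < 0) return null; // intersection behind the camera
        return new double[] {
            origin[0] + t * dir[0],
            origin[1] + t * dir[1],
            origin[2] + t * dir[2]
        };
    }

    /** Midpoint of the two marker hit points, used here as the robot position (assumption). */
    static double[] midpoint(double[] a, double[] b) {
        return new double[] {(a[0] + b[0]) / 2, (a[1] + b[1]) / 2, (a[2] + b[2]) / 2};
    }

    /** Euclidean distance, e.g. from the robot to a virtual object in world space. */
    static double distance(double[] a, double[] b) {
        double dx = a[0] - b[0], dy = a[1] - b[1], dz = a[2] - b[2];
        return Math.sqrt(dx * dx + dy * dy + dz * dz);
    }
}
```

With a horizontal AR plane through the origin (normal `{0, 1, 0}`), two rays from the camera through the marker positions yield two points on the plane. The distance from their midpoint to a generated model could then serve as the “virtual” sensor reading.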